
This post continues from the previous one.

Let's build a really simple example that detects a thumbs-up and a thumbs-down and shows the matching emoji.

[1] Creating the HandGestureProcessor

Create a HandGestureProcessor as shown below.

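The logic treats the gesture as a thumbs-up when the thumb's TIP point is above the center of the current screen, and as a thumbs-down when it is below.

(A real implementation of this feature would need much more detailed logic, but let's start with something very simple-!)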

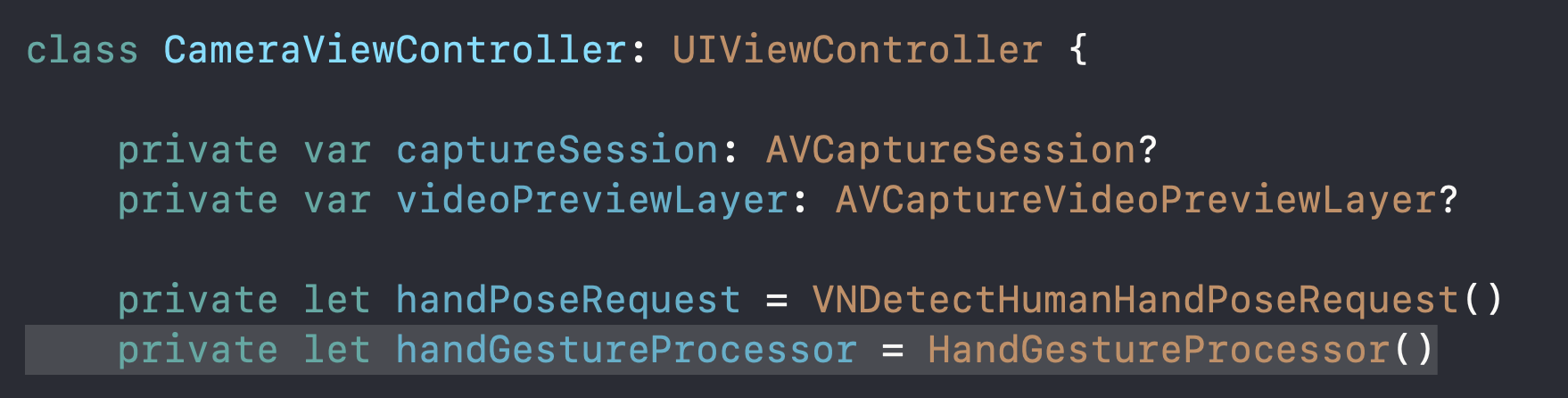
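Since the processor code itself is only in the screenshot, here is a minimal sketch of the rule described above, assuming both the thumb tip and the screen center are already in UIKit coordinates. The `State` cases and the `processThumbTip(_:screenCenter:)` method are hypothetical names, not necessarily the ones in the screenshot. The one detail worth calling out is that UIKit's y axis grows downward, so "above the center" means a *smaller* y value.

```swift
import Foundation

/// Minimal thumbs-up / thumbs-down classifier (sketch; names are hypothetical).
/// Both points are expected in UIKit coordinates, where y grows downward.
final class HandGestureProcessor {
    enum State {
        case unknown    // no thumb tip has been processed yet
        case thumbUp    // thumb tip is above the screen center
        case thumbDown  // thumb tip is at or below the screen center
    }

    private(set) var state: State = .unknown

    /// Updates `state` by comparing the thumb tip's y with the screen center's y.
    func processThumbTip(_ thumbTip: CGPoint, screenCenter: CGPoint) {
        // In UIKit a smaller y is higher on the screen.
        state = thumbTip.y < screenCenter.y ? .thumbUp : .thumbDown
    }
}
```

In the view controller, the processor would simply be a stored property (for example `private let gestureProcessor = HandGestureProcessor()`), and the screen center could be taken as `CGPoint(x: view.bounds.midX, y: view.bounds.midY)`.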

Then have the view controller hold a processor instance.

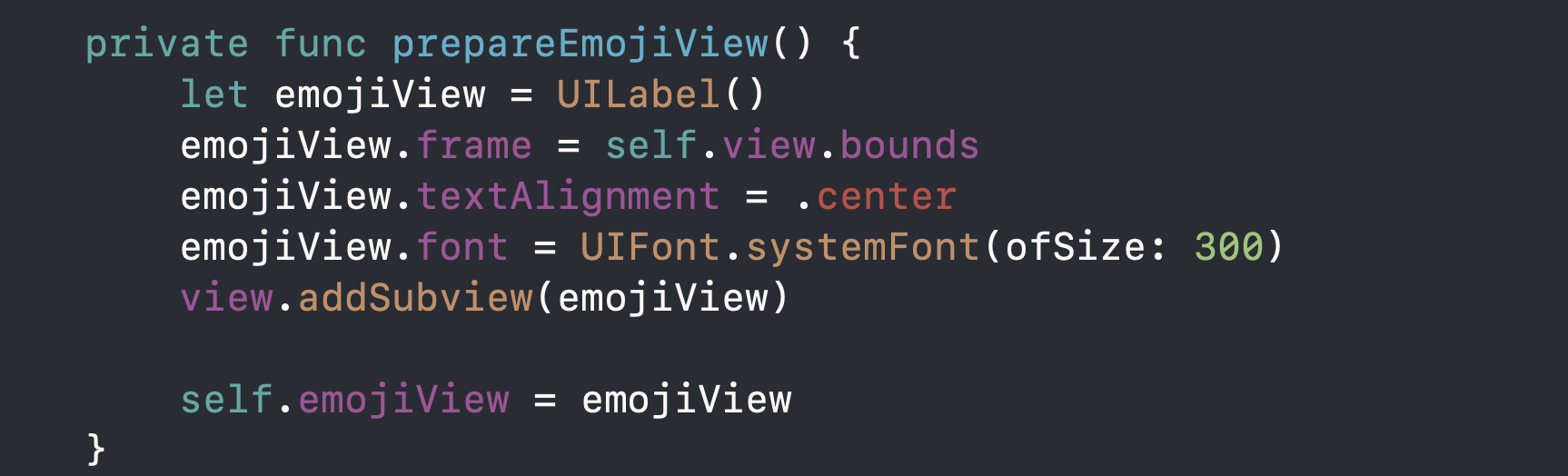

[2] Creating the emojiView

Create an emojiView and add it to the view.

[3] In the captureOutput method, pass only a thumbTipPoint whose confidence is not too low to the processPoints function

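In the previous post we got as far as obtaining thumbTipPoint.

Two things are added here: code that ignores the point unless its confidence is greater than 0.3,

and a call that passes thumbTipPoint to the processPoints function.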
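The confidence check can be sketched as a small pure function. To keep it runnable without the Vision framework, `RecognizedPoint` below is a hypothetical stand-in that models only the two properties used here (`location` and `confidence`); on device, the point would come from the hand pose observation obtained in the previous post. The 0.3 threshold is the one used in this post.

```swift
import Foundation

/// Hypothetical stand-in for Vision's recognized point, so the filtering
/// logic can run on its own. Only `location` and `confidence` are modeled.
struct RecognizedPoint {
    let location: CGPoint  // normalized Vision coordinates (0...1)
    let confidence: Float
}

/// Threshold from the post: points with confidence <= 0.3 are ignored.
let minimumConfidence: Float = 0.3

/// Returns the thumb tip location only when it is confident enough, otherwise nil.
func confidentThumbTip(_ point: RecognizedPoint?) -> CGPoint? {
    guard let point = point, point.confidence > minimumConfidence else {
        return nil
    }
    return point.location
}
```

Inside captureOutput, the result would then be used roughly as `guard let thumbTip = confidentThumbTip(...) else { return }` followed by a call to processPoints with that point.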

[4] Creating the processPoints function

Now let's write the processPoints function-!! ⭐️ Today's key part ⭐️

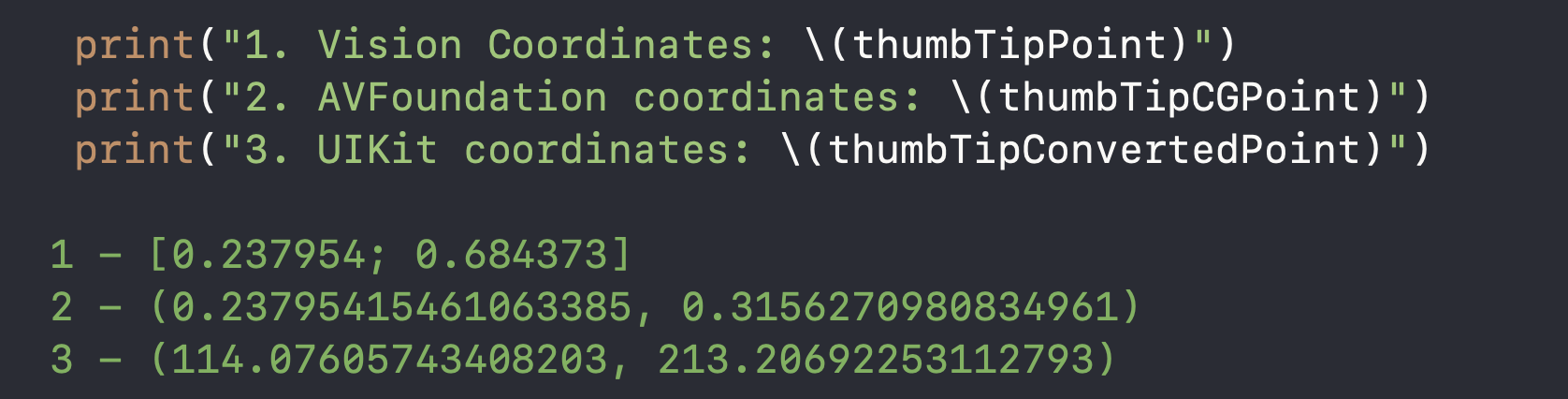

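1) Convert Vision coordinates -> AVFoundation coordinates -> UIKit coordinates

For example, the converted values come out like this.

We have to compare against the center of the current screen, which is a UIKit coordinate, so we need the thumb tip in UIKit coordinates as well.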
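The first half of this conversion is just a y-axis flip: Vision returns normalized points with the origin at the lower-left, while AVFoundation's capture device coordinates are normalized with the origin at the upper-left. A minimal sketch of that step follows; the function name is hypothetical. The second step (AVFoundation -> UIKit) depends on the preview layer's geometry, so on device it is done with `AVCaptureVideoPreviewLayer`'s `layerPointConverted(fromCaptureDevicePoint:)` and is only shown as a comment here.

```swift
import Foundation

/// Vision: normalized, origin at the lower-left.
/// AVFoundation capture device point: normalized, origin at the upper-left.
/// So the conversion keeps x and flips y.
func avFoundationPoint(fromVision point: CGPoint) -> CGPoint {
    CGPoint(x: point.x, y: 1 - point.y)
}

// Second step, on device only (needs the camera preview layer):
//   let uiKitPoint = previewLayer.layerPointConverted(fromCaptureDevicePoint: avPoint)
// The resulting point is in the preview layer's coordinate space, which is what
// gets compared with the screen center.
```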

2) Get the current hand gesture state from handGestureProcessor and show the emoji
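Choosing what to display can be kept separate from the UI code. The sketch below assumes a gesture state with thumbs-up, thumbs-down and unknown cases (the enum and function names are hypothetical) and maps each state to an emoji string, with `nil` meaning "hide the emoji".

```swift
import Foundation

/// Hypothetical gesture state, as a processor like the one in step [1] might expose it.
enum HandGestureState {
    case thumbUp
    case thumbDown
    case unknown
}

/// Picks the emoji for a gesture state; nil means the emoji view should be hidden.
func emoji(for state: HandGestureState) -> String? {
    switch state {
    case .thumbUp:
        return "👍"
    case .thumbDown:
        return "👎"
    case .unknown:
        return nil
    }
}
```

Because captureOutput runs on the queue passed to the video data output's sample buffer delegate rather than on the main thread, the actual emojiView update should be dispatched with `DispatchQueue.main.async`.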

But it doesn't work very well... :( Because of the GIF upload size limit, this GIF only shows the parts that worked reasonably well...!

Full code

Reference

developer.apple.com/documentation/vision/detecting_hand_poses_with_vision

